In the world of artificial intelligence, not all models are created equal. Some systems are built to be transparent, while others, known as Black Box AI, keep their decision-making under wraps. But what exactly is Black Box AI, and why is it both powerful and controversial?

Black Box AI refers to complex artificial intelligence systems whose internal workings are not easily understood, even by their creators. Here is a brief overview to give you an idea of how these systems work.

What is Black Box AI – An Overview

A black box, in a general sense, is a system whose insides cannot be seen. In AI, the inputs and outputs can be observed, but the decision-making process in between stays hidden, making it difficult to understand how the AI arrived at its conclusions.

Black Box AI models can also create problems related to flexibility (difficulty updating the model as needs change), bias and vested interests (incorrect results that may offend or harm some groups of people), accuracy validation (results that are hard to verify or trust), and security (unknown flaws that leave the model susceptible to cyberattacks).

In Europe, a high-level group of experts has even proposed instituting “seven requirements for trustworthy AI” as a way to address what the group calls “a major concern for society.”

Think it’s not that deep? Well, think of it this way: you ask an AI about a medical condition and it gives you a questionable answer, or you ask something related to your beliefs and it gives you a response that completely disrespects your ideology. Wouldn’t you want to know the reasoning behind it?

Now imagine how it would feel if, after all this, the same AI that hurt your beliefs also refused to give you an explanation.

How Does Black Box AI Work?

Black Box AI uses algorithms loosely inspired by the human brain, especially deep learning architectures built on multi-layered neural networks. These systems process vast amounts of data, recognise patterns, and “learn” through trial and error over time, without producing clear, explainable logic.

The more layers a model has, the more sophisticated it can be, but there is a trade-off: it also becomes harder to decipher why it made a particular decision.

Here’s how it works in a nutshell:

- Training Phase: The AI is fed a large dataset and “learns” patterns by adjusting its internal parameters.

- Decision Phase: Once trained, the AI can make predictions or classifications based on new input.

- No Clear Trail: Unlike traditional programming, we can’t see exactly why it made a certain decision.
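The three steps above can be sketched with a toy network. This is only a minimal illustration in plain Python, not how any production system is built: the `TinyNet` class, the three-input “majority vote” task, the seed and the learning rate are all assumptions made up for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """Hypothetical one-hidden-layer network, small enough to read in full."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _forward(self, x):
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        output = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, output

    def predict(self, x):
        return self._forward(x)[1]

    def train_step(self, x, target, lr):
        """One gradient step on the squared error for a single example."""
        hidden, output = self._forward(x)
        d_out = (output - target) * output * (1 - output)
        for j, h in enumerate(hidden):
            # Compute the hidden-layer gradient before w2[j] is updated.
            d_hidden = d_out * self.w2[j] * h * (1 - h)
            self.w2[j] -= lr * d_out * h
            self.b1[j] -= lr * d_hidden
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * d_hidden * xi
        self.b2 -= lr * d_out


def mean_squared_error(net, data):
    return sum((net.predict(x) - t) ** 2 for x, t in data) / len(data)


# Made-up task: the label is 1 when at least two of the three inputs are 1.
data = [([a, b, c], 1.0 if a + b + c >= 2 else 0.0)
        for a in (0, 1) for b in (0, 1) for c in (0, 1)]

net = TinyNet(n_inputs=3, n_hidden=3)
loss_before = mean_squared_error(net, data)

# Training phase: nudge the internal parameters again and again.
for _ in range(2000):
    for x, target in data:
        net.train_step(x, target, lr=0.5)
loss_after = mean_squared_error(net, data)

# Decision phase: ask the trained network about an input.
prediction = net.predict([1, 0, 1])

# No clear trail: the "reasoning" is just these numbers.
print("loss before/after:", loss_before, loss_after)
print("prediction for [1, 0, 1]:", prediction)
print("learned weights:", net.w1, net.w2)
```

Even in this toy case, the printed weights never spell out the rule “at least two inputs are on”. Scale that up to millions or billions of parameters and you have the black box problem.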

So while the outputs are often impressively accurate, the lack of visibility makes it hard to trust the system, especially in high-stakes scenarios. The black box is also a black hole when it comes to logic.

Why are Black Box AI Models Used?

Despite their lack of transparency, Black Box AI models are popular for a reason. Their complexity enables them to deliver exceptional results in many domains.

1. They Deliver Unmatched Accuracy and Performance

Black Box models, especially deep learning systems, often outperform traditional AI methods. Their ability to detect subtle patterns in data makes them ideal for tasks like image recognition, natural language processing and predictive analytics.

In fields like medical diagnostics, fraud detection and autonomous driving, these models often outperform traditional rule-based systems. When the goal is peak accuracy, businesses are often willing to accept a bit of mystery in exchange for better results.

2. They Handle Massive, Complex Datasets

Whether it’s analysing millions of financial transactions or medical records, Black Box models thrive on big data. Their layered structure helps them digest and learn from complicated data types. Generally, the more good-quality data you throw at a Black Box model, the better it gets.

This ability makes them invaluable in areas like code generation, genomics and financial recommendations.

3. They Protect Intellectual Property

One of the biggest reasons companies favour Black Box AI is secrecy. For companies developing proprietary models, the “black box” nature can act as a shield, preventing competitors from replicating their systems. It’s a strategic way to protect innovation.

Sure, this lack of transparency might be frustrating for regulators and end users, but for businesses? It keeps their models and data confidential.

4. They Reduce Human Bias

While AI can certainly inherit bias from its training data, it doesn’t have personal opinions, political leanings, or a bad mood. With no human subjectivity in the loop, AI-driven results rest on statistics and data rather than mood or opinion, although they are only as objective as the data behind them.

Properly trained AI can help reduce the personal biases that humans might bring into decision-making, especially in hiring, medical diagnosis, or loan approvals.

5. They Scale Exceptionally Well

Once trained, Black Box models can process new data in real time, which makes them highly scalable for commercial use. Whether it’s approving loan applications, analysing customer sentiment, or detecting cybersecurity threats, these models operate at a scale that would be impossible for human analysts.

Businesses love them because they automate complex tasks while maintaining (or improving) accuracy.

Issues With Black Box AI

As powerful as Black Box AI is, it comes with serious concerns, especially when it’s used in sensitive areas like healthcare, law enforcement, or finance.

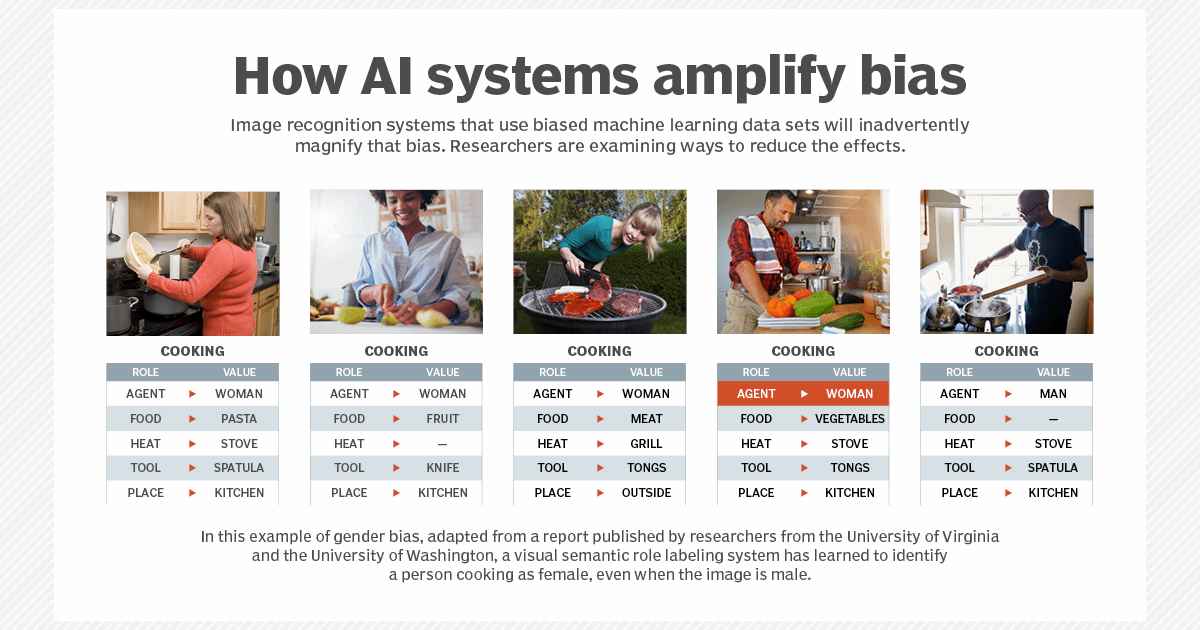

1. AI Bias

If the training data or the design of a deep learning neural network reflects the conscious or unconscious prejudices of its developers, the AI will absorb and amplify those biases. Since we can’t see how it makes decisions, detecting and correcting this bias becomes difficult.

For example, suppose AI is used to draw conclusions in a medical study, but the data it is fed covers only male patients, their genetics and their conditions, even though the conclusions will be applied to all genders alike. What happens next?

A conclusion will be reached, but gender differences will be ignored. Because the model offers no transparency, chances are the issue goes unnoticed and the findings are applied to everyone, even though they were never general to begin with.
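One common way to catch this kind of problem without opening the box is to check accuracy separately for each group. The sketch below assumes a deliberately simple “model” (a single learned cutoff) and entirely synthetic readings; the function names, group names and numbers are invented for illustration and do not come from any real study.

```python
def fit_threshold(samples):
    """Learn a cutoff halfway between the average healthy and average sick reading."""
    healthy = [value for value, is_sick in samples if not is_sick]
    sick = [value for value, is_sick in samples if is_sick]
    return (sum(healthy) / len(healthy) + sum(sick) / len(sick)) / 2


def accuracy(threshold, samples):
    """Share of samples where 'reading below the cutoff' matches the true label."""
    correct = sum((value < threshold) == is_sick for value, is_sick in samples)
    return correct / len(samples)


# Synthetic readings: (measurement, is_sick). The model only ever sees this group.
group_in_training = [(14.2, False), (13.8, False), (14.5, False),
                     (10.1, True), (9.7, True), (10.4, True)]

# A second synthetic group whose healthy readings naturally sit lower.
group_left_out = [(11.3, False), (11.0, False), (11.6, False),
                  (8.2, True), (7.9, True), (8.5, True)]

threshold = fit_threshold(group_in_training)
accuracy_seen = accuracy(threshold, group_in_training)
accuracy_unseen = accuracy(threshold, group_left_out)

print("cutoff learned from one group:", threshold)
print("accuracy on the group it was trained on:", accuracy_seen)
print("accuracy on the group it never saw:", accuracy_unseen)
```

A single overall accuracy figure would hide the gap between the two groups, which is why per-group checks matter when the model itself cannot explain its choices.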

2. Lack of Transparency and Accountability

The complexity of black box AI models can prevent developers from properly understanding and auditing them, even if they produce accurate results. That lack of understanding reduces transparency and weakens accountability.

When AI makes a critical decision like rejecting a loan application, there’s often no explanation available. That’s problematic for users and regulators alike.

Here is how a white box AI model compares:

| Aspect | Details |
|---|---|
| White Box AI | A form of artificial intelligence that is explainable and transparent. |
| Machine Learning Model | Uses explainable algorithms; operates in a transparent manner |
| Key Characteristics | Transparent decision-making; human-readable logic; interpretability; traceable outcomes; auditability |
| Benefits | Builds trust in AI systems; easier to debug and improve models; complies with regulations (e.g., GDPR); enables ethical AI practices; supports fairness and accountability |
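To make the contrast concrete, here is a rough sketch of what “human-readable logic” and “traceable outcomes” can look like for the loan example. The `decide_loan` function, its field names and its thresholds are hypothetical, chosen only to show the idea.

```python
def decide_loan(applicant):
    """Hypothetical white-box rule set: every rejection carries its reasons."""
    reasons = []
    if applicant["credit_score"] < 650:
        reasons.append("credit score below 650")
    if applicant["debt_to_income"] > 0.40:
        reasons.append("debt-to-income ratio above 40%")
    if applicant["years_employed"] < 1:
        reasons.append("less than one year in current job")
    # Approved only when no rule was triggered.
    return {"approved": not reasons, "reasons": reasons}


decision = decide_loan(
    {"credit_score": 700, "debt_to_income": 0.55, "years_employed": 3}
)
print(decision)
```

Rules like these are easy to audit and explain, but they usually cannot match a deep learning model on messy, high-dimensional data. That is exactly the trade-off this article is about.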

3. Lack of Flexibility

Black Box systems are trained for specific tasks. Making them switch or adapt to a new domain is difficult and often requires substantial retraining, sometimes from scratch.

Even a modest change in use case can therefore mean a lot of rework.

4. Difficult to Validate Results

In traditional systems, you can trace the logic to validate a result. With Black Box AI, you often have to trust the outcome without truly understanding it, which isn’t ideal in regulated industries.
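In practice, validating a black box usually means testing it from the outside: feed it cases where the right answer is already known and compare. The sketch below assumes a stand-in `opaque_model` and a handful of invented transactions; neither represents a real fraud system.

```python
def validate_black_box(predict, labeled_cases):
    """Compare a model's outputs with known answers, using inputs and outputs only."""
    failures = []
    for inputs, expected in labeled_cases:
        actual = predict(inputs)
        if actual != expected:
            failures.append((inputs, expected, actual))
    accuracy = 1 - len(failures) / len(labeled_cases)
    return accuracy, failures


def opaque_model(transaction):
    # Stand-in for a model we cannot inspect; pretend this line is hidden.
    return "fraud" if transaction["amount"] * transaction["risk"] > 500 else "ok"


# Invented held-out cases with known labels.
held_out = [
    ({"amount": 2000, "risk": 0.9}, "fraud"),
    ({"amount": 40, "risk": 0.1}, "ok"),
    ({"amount": 900, "risk": 0.2}, "fraud"),
    ({"amount": 300, "risk": 0.5}, "ok"),
]

accuracy, failures = validate_black_box(opaque_model, held_out)
print("accuracy:", accuracy)
print("cases the model got wrong:", failures)
```

This tells you how often the model is wrong and on which cases, but not why. That is the gap regulated industries struggle with.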

This is one reason why it’s not advisable to process sensitive data using a black box AI model.

5. Security Flaws

Since the internal logic is opaque, it’s hard to detect if a model has been tampered with or misled by adversarial inputs, making it vulnerable to security threats.

For instance, the developer or a third party could change the data to influence the model’s judgment so that it makes incorrect or even dangerous decisions. And since it is so hard to reverse engineer the model’s decision-making process, such tampering can be very difficult to detect and stop.
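A tiny example shows how little it can take to mislead a model. The sketch below uses a made-up linear classifier and nudges each input slightly in the direction that hurts the current decision most (a sign-of-the-gradient style perturbation). It assumes the attacker knows the weights; attackers facing a truly opaque model would have to estimate that direction by probing it. All names and numbers are illustrative.

```python
def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def classify(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def adversarial_nudge(weights, x, epsilon, current_label):
    """Shift every input by at most epsilon, pushing the score away from current_label."""
    direction = -1 if current_label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model parameters and input.
weights = [2.0, -3.0, 1.0]
bias = -0.1
original = [0.5, 0.3, 0.1]

label_before = classify(weights, bias, original)
tampered = adversarial_nudge(weights, original, epsilon=0.05, current_label=label_before)
label_after = classify(weights, bias, tampered)

print("original input:", original, "label:", label_before)
print("tampered input:", tampered, "label:", label_after)
```

No input moved by more than 0.05, yet the decision flipped. In an opaque model with thousands of inputs, a change like this is very hard to spot.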

When Should Black Box AI Be Used?

Despite the concerns, there are specific scenarios where Black Box AI makes sense:

- When high accuracy is more important than the ability to explain (e.g., facial recognition).

- In environments with massive data that’s too complex for rule-based systems.

- For commercial applications where IP protection is a priority.

The key is ethical and responsible deployment. Transparency tools like explainable AI (XAI) and model interpretability frameworks are helping bridge the trust gap.
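As a rough idea of how such tools peek into a black box, the sketch below swaps one input at a time for a neutral baseline value and measures how much the output moves. It is a deliberately simplified sensitivity probe, far cruder than established XAI methods such as SHAP or LIME, and the `opaque_score` function, feature names and values are all invented for the example.

```python
import math


def opaque_score(features):
    # Stand-in for a model we cannot inspect; pretend this formula is hidden.
    z = (3.0 * features["income"] - 4.0 * features["debt"]
         + 0.1 * features["age"] - 0.5)
    return 1.0 / (1.0 + math.exp(-z))


def sensitivity_probe(model, features, baseline):
    """Replace one feature at a time with its baseline and record the output shift."""
    original = model(features)
    effects = {}
    for name in features:
        probed = dict(features)
        probed[name] = baseline[name]
        effects[name] = abs(model(probed) - original)
    return effects


# Hypothetical applicant with features scaled to the 0..1 range.
applicant = {"income": 0.6, "debt": 0.7, "age": 0.4}
baseline = {"income": 0.5, "debt": 0.5, "age": 0.5}

effects = sensitivity_probe(opaque_score, applicant, baseline)
most_influential = max(effects, key=effects.get)

print("effect of each feature on the score:", effects)
print("most influential feature for this applicant:", most_influential)
```

The probe only calls the model; it never reads its internals. That is why this style of explanation can be bolted onto an existing black box, though it gives an approximation of the model’s behaviour rather than its actual reasoning.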

Black Box AI Applications

Black Box AI is already embedded in many industries we interact with daily, even if we don’t realise it.

Automotive

Self-driving cars use Black Box AI to navigate streets, identify obstacles, and make split-second decisions that are far too quick and complex for traditional systems.

Manufacturing

Predictive maintenance and smart automation in factories rely on deep learning models to spot equipment issues before they cause downtime.

Financial Services

From fraud detection to credit scoring, banks use Black Box models to make fast, data-driven decisions that would take humans hours or sometimes days.

Healthcare

Medical imaging, diagnostics, and personalised medicine benefit from AI’s pattern recognition abilities, but also raise ethical concerns due to the lack of explainability.

Final Verdict

Black Box AI, like other AI models in the world (e.g., ChatGPT and DeepSeek), is a double-edged sword. It offers incredible power, speed, and performance, but at the cost of transparency and trust.

As AI continues to shape industries and lives, the challenge is to strike the right balance between leveraging its capabilities and ensuring ethical, explainable use.
