Karachi, July 24, 2025: Meta has announced a set of new tools and updates aimed at strengthening protection for teens and children on its platforms, including Instagram and Facebook. These updates include improvements to direct messaging for teen users, expanded nudity protection, and enhanced safeguards for adult-managed accounts that primarily feature children.

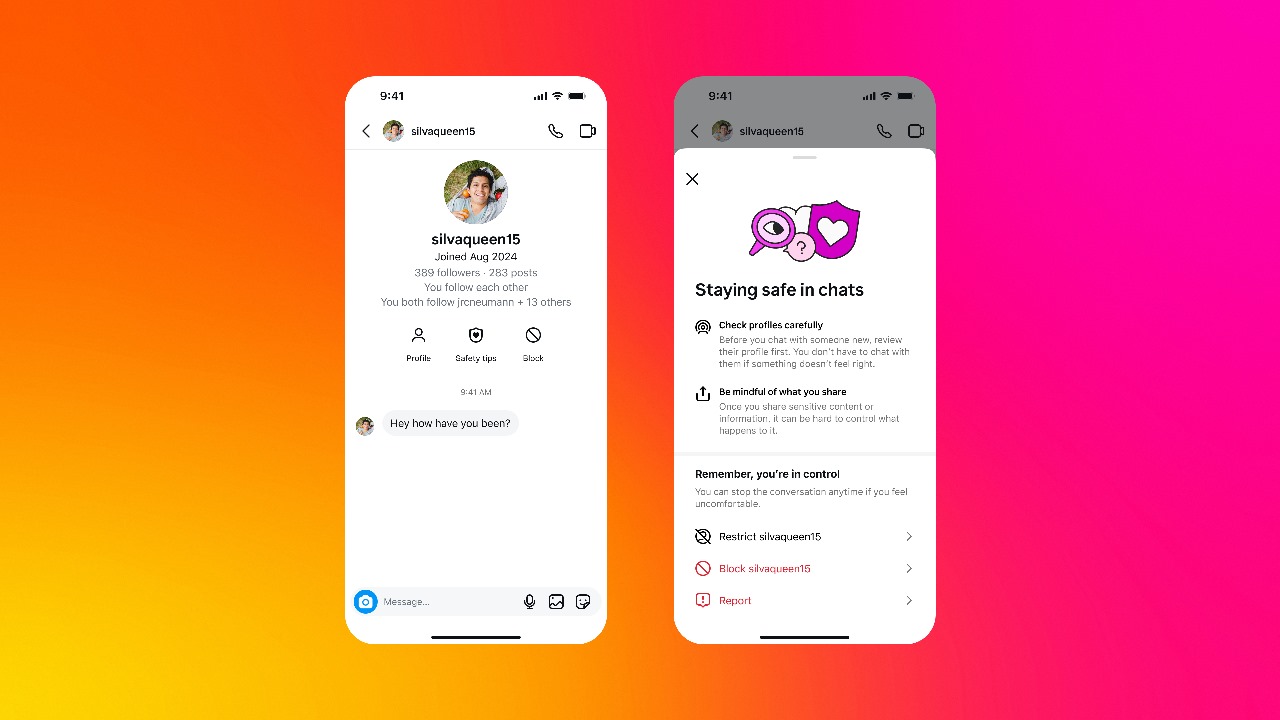

Teen Accounts will now display more contextual information about the people teens are messaging with, including safety tips and the account's creation date, along with a new option to block and report a sender in one step. In June alone, teens blocked 1 million accounts and reported another 1 million after seeing a safety notice.

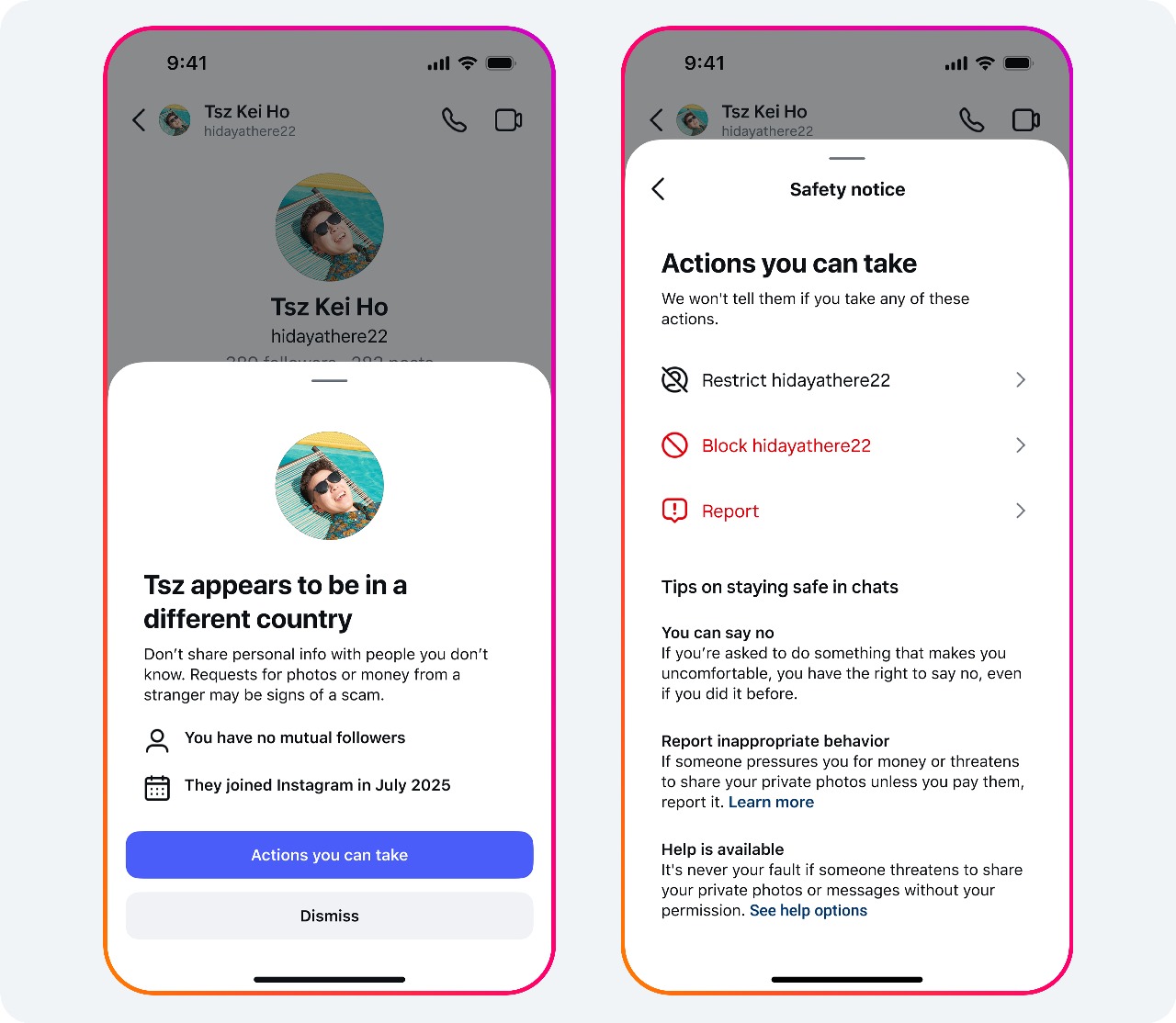

Meta is also taking steps to combat cross-border sextortion scams by rolling out a “Location Notice” feature on Instagram. The alert helps people identify when they’re chatting with someone located in another country, a tactic often used by people seeking to exploit young people. Over 10% of people who saw the notice tapped on it to learn more about the steps they could take.

Nudity protection, a key feature that automatically blurs suspected nude images in DMs, remains widely adopted. As of June, 99% of people, including teens, kept this setting turned on. The feature also reduced the likelihood of people forwarding explicit content, with nearly 45% deciding not to share such images after receiving a warning.

For accounts run by adults that prominently feature children, such as those managed by parents or talent agents, Meta is now rolling out Teen Account-level protections. These include applying strict message controls and turning on Hidden Words, which automatically filters offensive comments. The goal is to prevent unwanted or inappropriate contact before it happens.

Additionally, Meta will reduce the visibility of these accounts to suspicious people, making them harder to find via search or recommendations. This builds on earlier measures, such as removing the ability for these accounts to accept gifts or offer paid subscriptions.

As part of its continued crackdown, Meta removed nearly 135,000 Instagram accounts for leaving sexualized comments on, or requesting sexual images from, adult-managed accounts featuring children under 13. It also removed an additional 500,000 Facebook and Instagram accounts linked to those original accounts. Meta is working closely with other tech companies through the Tech Coalition’s Lantern program to prevent harmful actors from resurfacing on other platforms.

SOCIAL COPY

Meta Facebook Pages

We’re strengthening our safety tools to better protect teens and kids on @Instagram.

Our latest updates include:

🔒 New DM features to help teens spot scams and unwanted contact

🌍 Location notices to reduce cross-border sextortion risks

🚫 Nudity protection is on by default for teens

👨‍👧 More protections for adult-run accounts featuring children

These efforts reflect our commitment to building safer experiences for young people and supporting families in the digital age.

Learn more: https://about.fb.com/news/2025/07/expanding-teen-account-protections-child-safety-features/

#MetaPakistan #OnlineSafety #InstagramSafety