In recent months, there has been an alarming increase in reports of hackers using ChatGPT to trick users into downloading malware onto their devices. As one of the most advanced language models in the world, ChatGPT has drawn the attention of cybercriminals who want to exploit its capabilities to gain access to users’ sensitive information.

ChatGPT is an artificial intelligence language model developed by OpenAI. Its purpose is to generate natural language responses to user input in a conversational format. ChatGPT is trained on a massive dataset of text from the internet and is capable of understanding and processing natural language queries in a way that closely resembles human conversation.

Cybercrime through engaging conversations

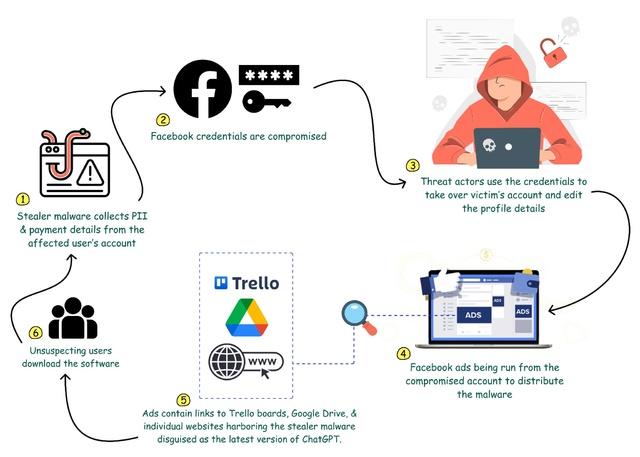

However, this advanced AI technology has also attracted cybercriminals looking to exploit its capabilities for malicious purposes. Hackers have discovered that ChatGPT can help them create convincing, engaging conversations that trick users into downloading malware.

One of the most common ways hackers are using ChatGPT to trick users is by posing as customer support representatives for popular software or service providers. They will create a chatbot that looks and sounds like a legitimate customer support agent and engage users in a conversation about an issue they are experiencing with their software or service.

During the conversation, the chatbot encourages the user to download a file or click a link to resolve the issue. In reality, the file or link contains malware that infects the user’s device and lets the hacker access sensitive information such as login credentials, financial details, or other personal data.

Creating phishing scams

Another way hackers are using ChatGPT to trick users is by creating phishing scams. They will create a chatbot that looks and sounds like a legitimate representative of a well-known company, such as a bank or an online retailer. The chatbot will engage the user in a conversation about a supposed problem with their account or order, and will ask the user to provide personal information or click on a link to resolve the issue.

Once the user provides the information or clicks on the link, the hacker can use it to gain access to the user’s account or to infect their device with malware. In some cases, the hacker may even ask the user to transfer money to a fake account or provide sensitive information that can be used for identity theft.

How to avoid getting scammed

To protect yourself from these types of scams, it is important to be aware of the risks and to take steps to safeguard your information. Here are some tips to help you avoid falling victim to ChatGPT-based malware attacks:

- Be cautious when interacting with chatbots. If you receive a message from a chatbot that seems suspicious or too good to be true, don’t engage with it.

- Don’t download files or click on links from unknown sources. If you are not sure whether a file or link is legitimate, leave it alone.

- Keep your antivirus software up to date. Make sure you have the latest version installed and that it is set to update automatically.

- Use strong passwords and enable two-factor authentication. Use a strong, unique password for each account, and turn on two-factor authentication wherever it is available.

- Stay informed about the latest threats. Keep up with new malware threats and phishing scams by reading news articles and security blogs.

Hackers are increasingly using ChatGPT to trick users into downloading malware. By being aware of the risks and taking steps to protect your information, you can reduce your chances of falling victim to these types of scams. Stay vigilant and always be cautious when interacting with chatbots or downloading files from unknown sources.

Stay tuned to Brandsynario for the latest news and updates.