ChatGPT is a powerful AI language model that has revolutionized the way we interact with machines. It can generate natural-sounding text, answer complex questions, and even hold a conversation like a human being. However, it’s still just technology, so it can’t do everything, and there are clear limits to what ChatGPT can do.

For example, it may be a master of language, but it can’t replace the ingenuity, creativity, and empathy of a real person. ChatGPT can’t experience emotions or interpret non-verbal cues. (Then again, a lot of humans can’t do that either nowadays, but you get the point.) Anyway, let’s see what else it can’t do.

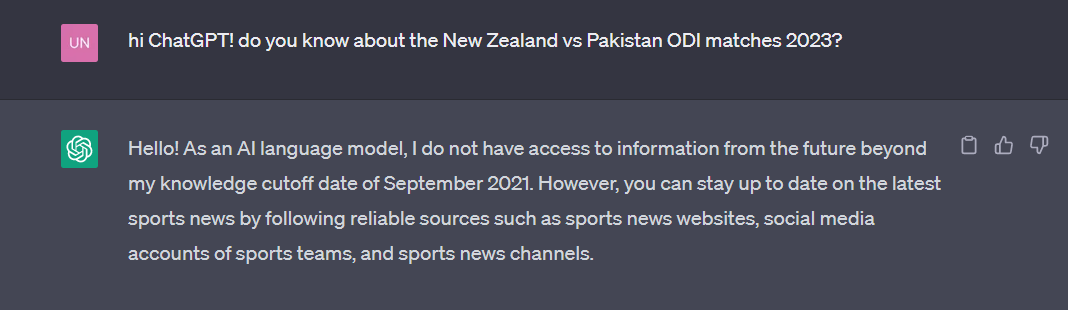

ChatGPT knows nothing beyond 2021. Its language model is trained on a large body of text, but that data needs a cutoff point, which for ChatGPT is September 2021. So if you ask it about anything that happened after that date, ChatGPT is largely useless. For the same reason, it has no knowledge of current events or recent real-world developments.

3. It Does Not Discuss Politics

ChatGPT refrains from discussing politics. It does not comment on partisan politics or take sides in political debates, and if you press it for an opinion, it simply declines to give one. However, it does provide information on political events and political figures; it just avoids expressing a preference for one party or position over another.

4. It Won’t Try To Break Its Programming

ChatGPT will not try to get around its programming. Since its release, users, especially students, have tried to find loopholes in its limitations and safety features. Simply asking ChatGPT to ignore its safety features will not work. However, jailbreaking may be an option. For example, if you directly ask ChatGPT to perform a function it shouldn’t, it will reply with its standard automated refusal. But if you ask it to perform its regular functions and then perform another function while behaving differently, it can do so.

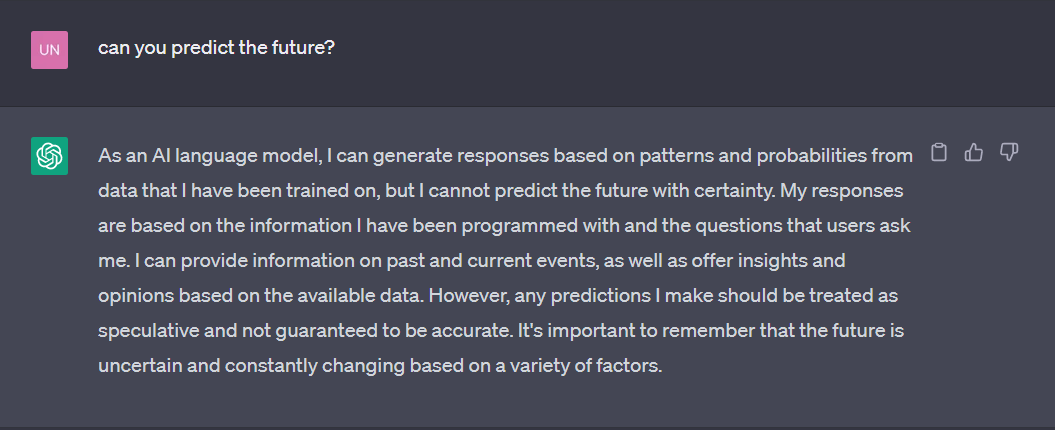

5. It Cannot Predict The Future

ChatGPT cannot predict the future; otherwise it would practically be a time machine. There are two reasons for this limitation: first, real-world events unfold in reality and can’t simply be generated by an AI, and second, ChatGPT relies only on a limited set of data that stops at its training cutoff. Additionally, OpenAI wants to avoid liability for errors, so ChatGPT declines to make predictions. Jailbreaking ChatGPT may get you a guess, but don’t count on it being accurate, so make sure to do your own research first!

6. It Can’t Search The Internet

ChatGPT is also unable to search the internet. You may ask it to look something up for you, but it will fail to do so. Unlike Siri, it cannot look anything up online. This is one of the key differences between ChatGPT and Google Bard.

ChatGPT cannot search the internet in any capacity, whereas Google Bard was built as a modern AI chatbot that can easily search the internet. Then again, Google already had Google Assistant, so that’s no surprise! Therefore, if you want to look something up, you’ll have to do it yourself.

What do you think about these blind spots of AI? Let us know in the comments below.

Stay tuned to Brandsynario for more news and updates.